Technical Paper Title: Human Computer Interfacing

Authors: M. SAI KRISHNA & B.VENKATESH BABU, 1st BTech, CSE

College: Prakasam Engineering College, Kandukur

PREAMBLE:

This paper is intended mainly for blind people who cannot use a computer efficiently and who can interact with it only through a Braille display or through touch typing. It offers an alternative that helps them reduce the vulnerabilities that arise when they deal with system security and data security (for example, entering wrong passwords) and with other security-sensitive applications.

ABSTRACT:

JAWS is an acronym for “Job Access With Speech”. The first part of this paper introduces the need for the refreshable Braille display, a device that sits under the keyboard and presents characters by raising dots through holes in a flat surface. The second part covers the fundamentals of speech synthesis, which converts text to speech, and the speech units it uses. The third part describes speech production by the vocal cords and acoustic phonetics. The next part gives a detailed description of the various speech units (words, syllables, demisyllables, diphones, allophones, phonemes) and the way these primitive units are combined to form full speech with phonetic sounds. It also covers the analysis of speech signals and the various synthesis methods, including the coding of vocal tract parameters using Linear Predictive Coding (LPC) synthesis with its block diagram. The fifth part is about the principles of Automatic Speech Recognition (ASR), the stages involved in it, and the factors that influence speech-unit synthesis. A later part discusses the signal-processing front end, parameterisation and its features, and blocking and windowing.

The sixth part discusses the various speech recognition techniques, followed by the conclusion and the bibliography.

INDEX

Introduction

Why JAWS

Fundamentals of speech synthesis

Speech units

Speech production and Acoustic units

Synthesis methods

INTRODUCTION:

The refreshable Braille display is an electro-mechanical device for displaying Braille characters, usually by means of raising dots through holes in a flat surface. The display sits under the computer keyboard.

It is used to present text to computer users who are blind and cannot use a normal computer monitor. Speech synthesizers are also commonly used for the same task, and a blind user may switch between the two systems depending on circumstances. Because of the complexity of producing a reliable display that will cope with daily wear and tear, these displays are expensive. Usually, only 40 or 80 Braille cells are displayed. Models with 18-40 cells exist in some note-taker devices. On some models the position of the cursor is represented by vibrating the dots, and some models have a switch associated with each cell to move the cursor to that cell directly. The mechanism that raises the dots uses the piezoelectric effect: some crystals expand when a voltage is applied to them. Such a crystal is connected to a lever, which in turn raises the dot. There has to be one such crystal for each dot of the display, i.e., eight crystals per character.
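As a rough illustration of the eight-dots-per-cell arrangement, the sketch below stores each cell as an 8-bit mask, one bit per dot (and hence per crystal), and renders it with the Unicode Braille Patterns block, in which dot n corresponds to bit n-1 above U+2800. The letter table covers only a to j, and the function names and the 40-cell default are illustrative assumptions, not part of any particular display's or screen reader's interface.

```python
# Dots raised for the letters a-j in standard six-dot Braille (dots 7 and 8 unused here).
LETTER_DOTS = {
    "a": (1,), "b": (1, 2), "c": (1, 4), "d": (1, 4, 5), "e": (1, 5),
    "f": (1, 2, 4), "g": (1, 2, 4, 5), "h": (1, 2, 5), "i": (2, 4), "j": (2, 4, 5),
}


def cell_mask(dots):
    """Pack raised dot numbers (1-8) into one byte: dot n sets bit n-1."""
    mask = 0
    for dot in dots:
        mask |= 1 << (dot - 1)
    return mask


def to_cells(text, width=40):
    """Convert text to a fixed-width line of cell masks.

    Characters missing from LETTER_DOTS (including spaces) become blank cells.
    """
    masks = [cell_mask(LETTER_DOTS.get(ch, ())) for ch in text.lower()[:width]]
    return masks + [0] * (width - len(masks))


def render(masks):
    """Show the masks as Unicode Braille patterns (U+2800 + mask)."""
    return "".join(chr(0x2800 + m) for m in masks)


line = to_cells("bad cab")
print(render(line).rstrip("\u2800"))
```

Each mask in `line` corresponds to the set of crystals that would be driven for one cell; a real display would receive these values through its own driver protocol, which is not modelled here.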

The software that controls the display is called a screen reader. It gathers the content of the screen from the operating system, converts it into Braille characters, and sends it to the display. Screen readers for graphical operating systems are especially complex, because graphical elements like windows and slide bars have to be interpreted and described in text form. A new development, called the rotating-wheel Braille display, was developed in 2000 by the National Institute of Standards and Technology and is still in the process of commercialization. Braille dots are put on the edge of a spinning wheel, which allows the blind user to read continuously with a stationary finger while the wheel spins at a selected speed. The Braille dots are set in a simple scanning-style fashion as the dots on the wheel spin past a stationary actuator that sets the Braille characters. As a result, manufacturing complexity is greatly reduced, and the rotating-wheel Braille display is expected to be much less expensive than traditional Braille displays.


FUNDAMENTALS OF SPEECH SYNTHESIS:

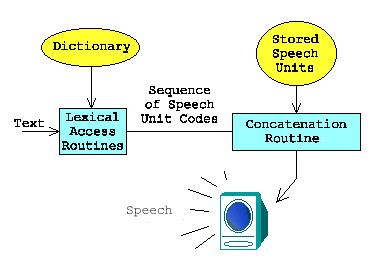

Speech synthesis is the automatic generation of speech waveforms from an input text and from previously analyzed digital speech data. The critical issues for current speech synthesizers concern trade-offs among conflicting demands: maximizing the quality of speech while minimizing memory space, algorithmic complexity, and computation time. The text might be entered by keyboard or optical character recognition, or obtained from a stored database. Speech synthesizers then convert the text into a sequence of speech units by lexical access routines. Using large speech units such as phrases and sentences can give high-quality output speech but requires much more memory. A block diagram of the steps in speech synthesis is shown below.

| Units | Quantity | Description | Advantages / Disadvantages |
| --- | --- | --- | --- |
| Words | 300,000 (50,000) | The fundamental units of a sentence. | Advantages: yield high-quality speech; simple concatenation synthesis algorithm. Disadvantages: limited by the memory requirement; need to adjust the duration of words. |
| Syllables | 20,000 (4,400) | It consists of a nucleus | Disadvantage: syllable boundary is uncertain. |
| Demisyllables | 4,500 (2,000) | Obtained by dividing syllables in half, with the cut during the vowel, where the effects of coarticulation are minimal. | Advantages: preserve the transition between adjacent phones; simple smoothing rules; produce smooth speech. |
| Diphones | 1,500 (1,200) | Obtained by dividing a speech waveform into phone-sized units, with the cuts in the middle of each phone. | Advantages: preserve the transition between adjacent phones; simple smoothing rules; produce smooth speech. |
| Allophones | 250 | They are phonemic variants. | Advantage: reduce the complexity of the interpolation algorithm compared to phonemes. |
| Phonemes | 37 | The fundamental unit of phonology. | Advantage: memory requirement is small. Disadvantages: require complex smoothing rules to represent the coarticulation effect; need to adjust intonation at each context. |

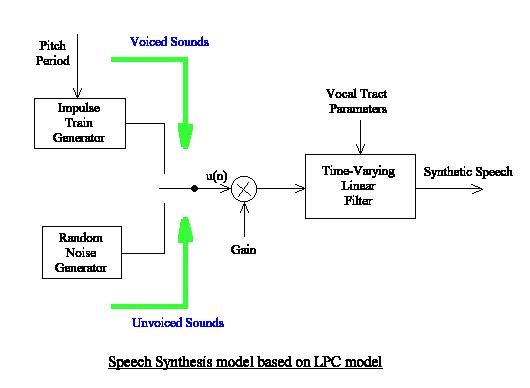

Speech units are retrieved and concatenated to output the synthetic speech. Speech is often modeled as the response of a time-varying linear filter (corresponding to the vocal tract from the glottis to the lips) to an excitation waveform consisting of broadband noise, a periodic waveform of pulses, or a combination. In summary, the most important components of a synthesizer’s algorithm are:

1. Stored speech units: storage of speech parameters, obtained from natural speech, in terms of speech units.

2. Concatenation routines: program rules to concatenate these units, smoothing the parameters to create time trajectories.

Real-time speech synthesizers have been available for many years. Their speech is generally intelligible but lacks naturalness. Quality inferior to that of human speech is usually due to inadequate modeling of three aspects of human speech production: coarticulation, intonation, and vocal-tract excitation. Most synthesizers reproduce a speech bandwidth of 0-3 kHz (e.g. for telephone applications) or 0-5 kHz (e.g. for higher quality).

Frequencies up to 3 kHz are sufficient for vowel perception since vowels are adequately specified by the first three formants.
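The stored-units-plus-concatenation scheme described above can be sketched as a lookup followed by a join that smooths each boundary. The sketch below is a simplification under stated assumptions: the units are raw waveform samples rather than speech parameters, the smoothing rule is a plain linear cross-fade, and the inventory and function names are hypothetical.

```python
def crossfade_concat(units, overlap):
    """Join waveform units, linearly cross-fading `overlap` samples at each boundary."""
    out = list(units[0])
    for unit in units[1:]:
        n = min(overlap, len(out), len(unit))
        for i in range(n):
            w = (i + 1) / (n + 1)            # fade-in weight of the incoming unit
            j = len(out) - n + i             # matching position at the tail of `out`
            out[j] = (1 - w) * out[j] + w * unit[i]
        out.extend(unit[n:])                 # append the part that did not overlap
    return out


# Hypothetical inventory: unit name -> stored samples (placeholder values).
inventory = {
    "unit_a": [1.0] * 8,
    "unit_b": [0.0] * 8,
}

sequence = ["unit_a", "unit_b"]              # as produced by lexical access
speech = crossfade_concat([inventory[name] for name in sequence], overlap=4)
print(len(speech))
```

A larger overlap gives a smoother join at the cost of blurring the transition, which is one small instance of the quality trade-offs discussed in this section.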

SPEECH UNITS:

The choice of speech units determines the storage memory required and the quality of the synthetic speech. Some possible units are described in the table given below. The number in brackets in the “Quantity” column is the number of subword units sufficient to describe all English words.

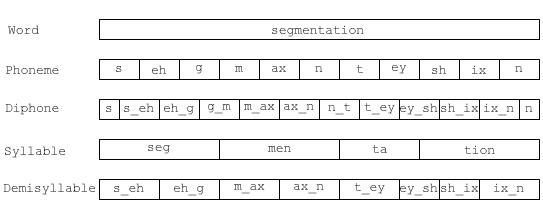

Consider the English word “segmentation”. Its representation according to each of the above subword unit sets is:

SPEECH PRODUCTION AND ACOUSTIC-PHONETICS:

The English phonemes can be classified according to how they are produced by the vocal organs. An understanding of the speech production mechanism helps us to analyze speech sounds. Acoustic phonetics is the study of the physics of the speech signal. When sound travels through the air from the speaker’s mouth to the hearer’s ear, it does so in the form of vibrations in the air. These vibrations can be measured and analyzed using the mathematical techniques of the physics of sound.

SPEECH UNITS:

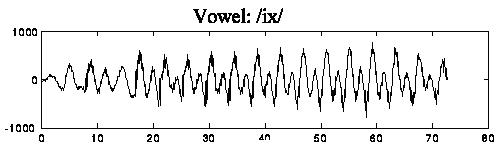

The listener converts the acoustic signals into a sequence of words and sentences. The most familiar language units are words. They can be thought of as a sequence of smaller linguistic units, phonemes, which are the fundamental units of phonology. We will use ARPABET symbols in the rest of this paper. The easiest way to understand the nature of phonemes is to consider a group of words like “hid”, “head”, “hood”, and “hod”. All these words are made up of an initial, a middle, and a final element. In our examples, the initial and final elements are identical, but the middle elements are different. It is the difference in this middle element that distinguishes the four words. Similarly, we can find the sounds that differentiate one word from another for all the words of a language. Such distinguishing sounds are called phonemes. There are about fifty phoneme units in English. The following figure shows a segment of the vowel /ix/. The quasi-periodicity (near-periodicity) of voiced speech can be observed.
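The minimal-pair argument above can be checked mechanically. The sketch below writes the four example words in ARPABET and reports where each pair differs; the transcriptions follow common American English pronunciation and the helper name is an illustrative assumption.

```python
from itertools import combinations

# ARPABET transcriptions of the four example words.
WORDS = {
    "hid":  ["HH", "IH", "D"],
    "head": ["HH", "EH", "D"],
    "hood": ["HH", "UH", "D"],
    "hod":  ["HH", "AA", "D"],
}


def differing_positions(a, b):
    """Return the positions at which two equal-length phoneme sequences differ."""
    return [i for i, (x, y) in enumerate(zip(a, b)) if x != y]


for w1, w2 in combinations(WORDS, 2):
    positions = differing_positions(WORDS[w1], WORDS[w2])
    print(w1, w2, positions)

# The middle elements are the phonemes that tell the words apart.
middle_phonemes = sorted({phones[1] for phones in WORDS.values()})
print(middle_phonemes)
```

Every pair differs only at position 1, the middle element, so the four vowels are distinct phonemes.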

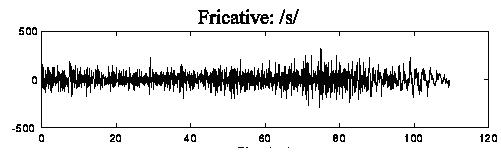

Fricative sounds are generated by constricting the vocal tract at some point along its length and forcing the air stream to flow through at a velocity high enough to produce turbulence. This turbulent air sounds like a hiss, e.g. /hh/ or /s/.

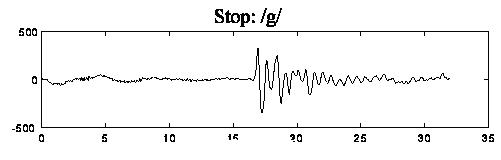

Plosive or stop sounds result from blocking the vocal tract by closing the lips and nasal cavity, allowing the air pressure to build up behind the closure, and then suddenly releasing it. This mechanism produces sounds like /p/ and /g/. The following figure shows the stop /g/. The silence before the burst is the stop closure.

Acoustic Phonetics Analysis of Speech Signals:

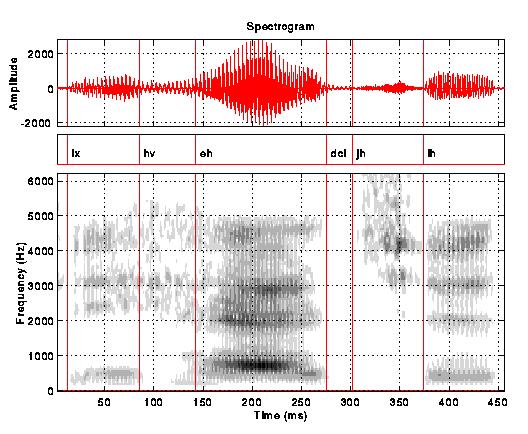

Based on knowledge of the speech production mechanism and the study of acoustic phonetics, we are able to extract a set of features that best represent a particular phoneme. One popular technique is the spectrogram, which describes how the frequency content of a speech signal changes with time. Suppose we have a signal sampled at 16 kHz; the typical steps to compute the spectrogram are as follows. The speech is blocked into frames by a Hamming window with a duration of 256 samples. A 256-point FFT (fast Fourier transform) is applied to each windowed frame. The power spectra in dB are plotted. Horizontal bars in the spectrogram display the formant frequencies, while the vertical lines indicate the pitch period (i.e. the inverse of the fundamental frequency).
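The spectrogram steps above (block, window, transform, convert to dB) can be sketched in a few lines. This is a minimal sketch under stated assumptions: a direct DFT stands in for the FFT so that only the standard library is needed, the hop of 128 samples between frames is an assumed value that the text does not specify, and a pure 1 kHz tone replaces real speech.

```python
import cmath
import math


def hamming(n):
    """Hamming window of length n."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def power_spectrum_db(frame):
    """Power spectrum in dB of one windowed frame (bins 0..N/2) via a direct DFT."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        s = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        spectrum.append(10 * math.log10(abs(s) ** 2 + 1e-12))  # small floor avoids log(0)
    return spectrum


def spectrogram(signal, frame_len=256, hop=128):
    """Return one dB power spectrum per frame; rows are time, columns are frequency bins."""
    window = hamming(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(frame_len)]
        frames.append(power_spectrum_db(frame))
    return frames


FS = 16000                                           # sampling rate in Hz
tone = [math.sin(2 * math.pi * 1000 * t / FS) for t in range(1024)]
spec = spectrogram(tone)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(peak_bin * FS / 256, "Hz")
```

With a 256-sample frame at 16 kHz, each bin is 62.5 Hz wide, so frequency resolution is traded against time resolution by the choice of window length.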

Synthesis Methods:

Synthesizers can be classified by how they parameterize speech for storage and synthesis.

1. Waveform Synthesizers

2. Terminal Analog Synthesizers

3. Articulatory Synthesizers

4. Formant Synthesizers

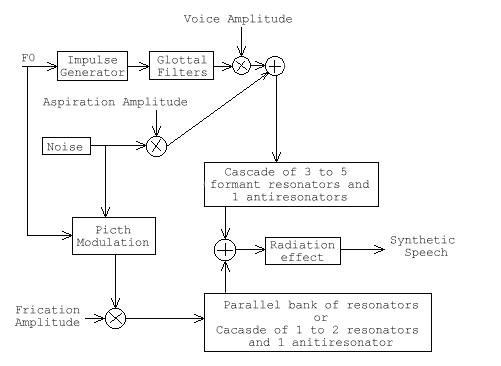

Formant Synthesizers:

A formant synthesizer employs formant resonances and bandwidths to represent the stored spectrum. The vocal tract filter is usually represented by 10-23 spectral parameters. Formant synthesizers have an advantage over LPC systems in that bandwidths can be more easily manipulated and zeros can be directly introduced into the filter. However, locating the poles and zeros automatically in natural speech is a more difficult task than automatic LPC analysis. Most formant synthesizers use a model similar to that shown below.

Fig: Block Diagram of Formant Synthesizer
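To make the idea of formant resonances and bandwidths concrete, the sketch below filters a periodic pulse train through a cascade of second-order digital resonators, each defined by a centre frequency and a bandwidth. The resonator recurrence is a common formulation for this kind of synthesizer; the cascade arrangement, the 10 kHz sampling rate, and the three formant values are illustrative assumptions, not parameters taken from this paper's block diagram.

```python
import math


def resonator_coeffs(freq_hz, bw_hz, fs):
    """Coefficients of a second-order resonator with unity gain at 0 Hz."""
    t = 1.0 / fs
    c = -math.exp(-2 * math.pi * bw_hz * t)
    b = 2 * math.exp(-math.pi * bw_hz * t) * math.cos(2 * math.pi * freq_hz * t)
    a = 1 - b - c
    return a, b, c


def resonate(x, freq_hz, bw_hz, fs):
    """Filter x through y[n] = a*x[n] + b*y[n-1] + c*y[n-2]."""
    a, b, c = resonator_coeffs(freq_hz, bw_hz, fs)
    y1 = y2 = 0.0
    out = []
    for sample in x:
        y = a * sample + b * y1 + c * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def pulse_train(n, period):
    """Periodic unit pulses, a crude stand-in for voiced (glottal) excitation."""
    return [1.0 if i % period == 0 else 0.0 for i in range(n)]


FS = 10000
# Assumed (frequency, bandwidth) pairs in Hz for three formants; illustrative only.
FORMANTS = [(500, 60), (1500, 90), (2500, 120)]

signal = pulse_train(2000, period=100)       # 100 Hz fundamental at FS = 10 kHz
for freq, bw in FORMANTS:                    # cascade: output of one feeds the next
    signal = resonate(signal, freq, bw, FS)
print(len(signal), max(abs(s) for s in signal))
```

Changing a formant's bandwidth here means changing one number, which illustrates why bandwidths are easier to manipulate in a formant synthesizer than in an LPC system.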

Conclusion:

Finally, an effective, interactive communication medium has been devised, giving a glimpse of human-computer interfacing through Braille displays. Speaker-independent speech synthesizers may require highly sophisticated algorithms with high time and space complexity.

Thus JAWS enables blind people to use a computer as readily as sighted users do.

Bibliography:

The following websites have been taken for reference: