Technical Paper Title: Speech Processing, Speech Synthesis & Speech Recognition

Authors: S. Akarsh & K. Avinash, 2nd year B.Tech, CSE

College: Padmasri Dr. B. V. Raju Institute of Technology, Medak

Abstract:-

Speech recognition is an input system that consists of a microphone and speech recognition software. The software is the complex part. Sounds received through the microphone must be broken down into phonemes, and a “best guess” algorithm then matches the phonemes and syllables against a database of possible words. To cope with variations in speech such as accent, volume, pitch, inflection, male versus female voices, sarcasm, and colloquialisms, the software must examine context and word patterns to determine the intended meaning. This requires an enormous database, which in turn requires a computer with fast processing and ample memory. Speech recognition will greatly change the way information is entered into computers: ease, convenience, and speed are three advantages of using a microphone instead of a keyboard. The technology will have an impact on all careers, but especially on education. Business Education teachers must stay abreast of this technology and be willing to adjust their courses to best prepare students.

Introduction:-

Speech will become a primary interface for computers. Until now, speech or voice recognition software has had to be run as a separate program.

Speech recognition:-

Speech or voice recognition is the technology that allows computer input through spoken commands. To use speech recognition, a computer needs a microphone and the proper software; of the two, the software is the complex part. Speech recognition software works by breaking sound down into atomic units called phonemes and then piecing them back together. A phoneme is the smallest unit of sound that distinguishes one word from another; it may be written with one letter or with several, such as the “th” in “this” or the “sh” in “ship”. After the software has broken the sounds into phonemes and syllables, a “best guess” algorithm maps the phonemes and syllables to actual words. These words are then translated into ideas by natural language processing, which takes the output of the speech recognition software and attempts to work out what the user meant by examining context, patterns, phrases, and so on. Natural language processing and speech recognition work together to resolve ambiguous words and homophones, e.g. two, too, and to.
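The use of context to choose between homophones can be sketched with word-pair (bigram) counts. This is a minimal illustration, not how any particular product works: the tiny corpus and the helper name `pick_homophone` are hypothetical, and real systems use far larger corpora and smoothed probabilities rather than raw counts.

```python
from collections import Counter

# Toy corpus standing in for the large text database a real system would use.
CORPUS = """i want to go home . i have two cats . me too .
she went to the store . he bought two apples . you can come too .
we need to talk . they have two dogs ."""

def bigram_counts(text):
    """Count adjacent word pairs in whitespace-separated text."""
    words = text.split()
    return Counter(zip(words, words[1:]))

COUNTS = bigram_counts(CORPUS)
HOMOPHONES = {"to", "too", "two"}

def pick_homophone(prev_word, next_word, candidates=HOMOPHONES, counts=COUNTS):
    """Return the spelling best supported by the words on either side."""
    def score(word):
        return counts[(prev_word, word)] + counts[(word, next_word)]
    # sorted() makes ties resolve deterministically (alphabetical order).
    return max(sorted(candidates), key=score)
```

For example, `pick_homophone("have", "cats")` favours “two”, while `pick_homophone("want", "go")` favours “to”, because those pairs occur in the corpus.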

Speech Recognition:-

Speech recognition is the understanding of speech by a computer: a system of computer input and control in which the computer recognizes spoken words and transforms them into digitized commands or text. With such a system, a computer can be activated and controlled by voice commands or can take dictation as input to a word processor or desktop publishing system.

Speech synthesis:-

Speech synthesis is the computer’s imitation of speech: computer-generated audio output that resembles human speech.

One form of it is a computer-controlled recording system in which basic sounds, numerals, words, or phrases are individually stored and played back under computer control as the reply to a keyboarded query.

More generally, it is the process of generating an acoustic speech signal that communicates an intended message, so that a machine can respond to a request for information by talking to a human user.
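The stored-unit playback approach described above can be sketched as simple concatenation. This is a minimal illustration under stated assumptions: the unit store, the placeholder tones standing in for real recordings, the 8 kHz sample rate, and the helper name `speak_digits` are all hypothetical.

```python
import math

SAMPLE_RATE = 8000  # assumed telephone-quality sample rate (samples per second)

def placeholder_clip(freq_hz, dur_s=0.2):
    """Stand-in for a stored recording: a short tone (hypothetical)."""
    n = int(SAMPLE_RATE * dur_s)
    return [0.5 * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]

# Hypothetical store of prerecorded units, one per spoken digit.
UNIT_STORE = {str(d): placeholder_clip(300 + 50 * d) for d in range(10)}

def speak_digits(reply, gap_s=0.05):
    """Concatenate the stored clip for each digit in `reply`, separated by silence."""
    silence = [0.0] * int(SAMPLE_RATE * gap_s)
    out = []
    for ch in reply:
        if ch not in UNIT_STORE:
            raise KeyError(f"no stored unit for {ch!r}")
        if out:
            out.extend(silence)  # short pause between units
        out.extend(UNIT_STORE[ch])
    return out
```

Real systems of this kind store many more units (words and whole phrases) and take care to smooth the joins between them.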

Speech signals:-

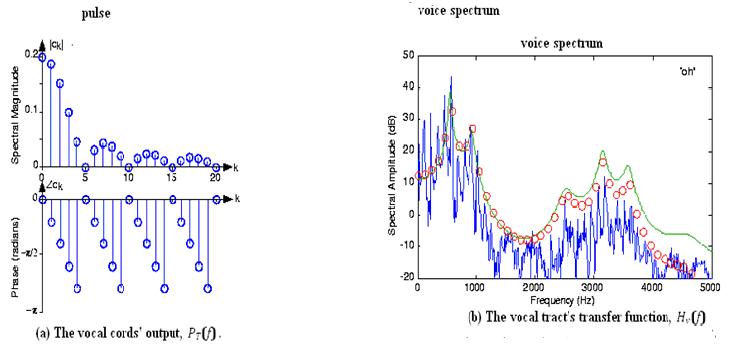

Since voiced speech is approximately periodic, it has a Fourier series representation, which can be obtained as a linear system’s response to a periodic signal. Because the acoustics of the vocal tract are linear, the spectrum of the output equals the product of the pitch signal’s spectrum and the vocal tract’s frequency response. We thus obtain the fundamental model of speech production:

$$S(f) = P_T(f)\,H_V(f) \qquad (1)$$

Here, $H_V(f)$ is the transfer function of the vocal tract system and $P_T(f)$ is the spectrum of the periodic pitch signal produced by the vocal cords, whose Fourier series is plotted on the left in Figure 1. If we had, for example, a male speaker with a pitch of about 150 Hz (T ≈ 6.7 ms) saying the vowel “oh”, the spectrum of his speech predicted by our model would be as shown on the right in Figure 1. The model spectrum idealizes the measured spectrum and captures all the important features. The measured spectrum clearly shows what are known as pitch lines, and our model tells us that they are due to the vocal cords’ periodic excitation of the vocal tract. The vocal tract’s shaping of the line spectrum is clearly evident but difficult to discern exactly; the model transfer function for the vocal tract makes the formants much more readily evident.
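To make equation (1) concrete, the sketch below multiplies an idealized pitch line spectrum by a vocal-tract magnitude response. The assumptions are stated loudly: the pitch lines are taken to have equal amplitude (ignoring the real pulse shape), and the vocal tract is a hypothetical product of two simple resonances whose centre frequencies and bandwidths are illustrative guesses, not measured formants of “oh”.

```python
import math

F0 = 150.0      # pitch in Hz, the male-speaker example from the text
T = 1.0 / F0    # pitch period in seconds (about 6.7 ms)

# Hypothetical formants (centre frequency, bandwidth) in Hz, chosen for
# illustration only; they are not measured values for the vowel "oh".
FORMANTS = ((500.0, 80.0), (900.0, 100.0))

def vocal_tract_gain(f, formants=FORMANTS):
    """Toy |H_V(f)|: product of second-order resonances, each equal to 1 at f = 0."""
    gain = 1.0
    for fc, bw in formants:
        gain *= fc ** 2 / math.sqrt((fc ** 2 - f ** 2) ** 2 + (bw * f) ** 2)
    return gain

def model_spectrum(n_harmonics=20, line_amplitude=1.0):
    """Return (frequency, |S(f)|) for the pitch lines at k/T, k = 1..n_harmonics.

    Assumes an idealised P_T(f) with equal-amplitude lines, so each line's
    height is set purely by the vocal tract gain at that frequency.
    """
    return [(k * F0, line_amplitude * vocal_tract_gain(k * F0))
            for k in range(1, n_harmonics + 1)]
```

With these assumed resonances the strongest line falls at 450 Hz, the harmonic nearest the first resonance: the pitch sets where the lines are, and the vocal tract sets how tall they are.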

Speech recognition is the ability of computer systems to accept speech input and act on it or transcribe it into written language. Current research efforts are directed toward applications of automatic speech recognition (ASR), where the goal is to transform the content of speech into knowledge that forms the basis for linguistic or cognitive tasks, such as translation into another language. Practical applications include database-query systems, information retrieval systems, and speaker identification and verification systems, as in telebanking. Speech recognition also has promising applications in robotics, particularly in the development of robots that can “hear.”

Figure 1: The vocal tract’s transfer function (on the right), shown as the thin, smooth line, is superimposed on the spectrum of actual male speech corresponding to the sound “oh”. The spectrum model’s output is shown as the sequence of circles, which indicate the amplitude and frequency of each Fourier series coefficient.

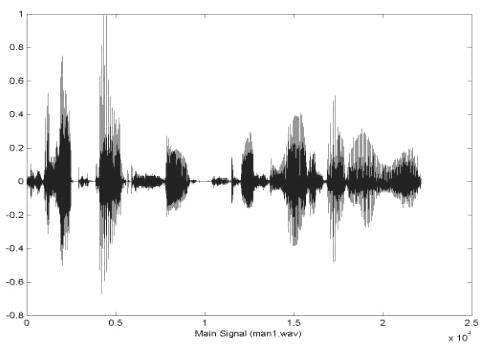

Speech processing:-

Speech processing is the study of speech signals and of the methods used to process them.

The signals are usually processed in a digital representation, so speech processing can be regarded as a special case of digital signal processing applied to speech signals.

It is also closely tied to natural language processing (NLP), since its input can come from, and its output can go to, NLP applications. For example, text-to-speech synthesis may run a syntactic parser on its input text, and the output of speech recognition may be used by information extraction techniques.
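Because speech processing is digital signal processing applied to speech, a typical first step is to cut the sampled signal into short overlapping frames, over which speech is treated as roughly stationary, and to compute a per-frame measure such as short-time energy. The sketch below is illustrative; the frame length, hop size, and Hamming window are common choices assumed here, not values taken from this paper.

```python
import math

def frames(signal, frame_len, hop):
    """Split a sampled signal into overlapping frames, dropping any short tail."""
    return [signal[i:i + frame_len]
            for i in range(0, len(signal) - frame_len + 1, hop)]

def hamming(n):
    """Hamming window of length n (n >= 2)."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def short_time_energy(signal, frame_len=200, hop=80):
    """Energy of each Hamming-windowed frame.

    At an assumed 8 kHz sample rate the defaults give 25 ms frames every 10 ms.
    """
    window = hamming(frame_len)
    return [sum((x * w) ** 2 for x, w in zip(frame, window))
            for frame in frames(signal, frame_len, hop)]
```

Frames whose energy is near zero can be treated as silence, a simple first step toward separating speech from pauses.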

Speech processing can be divided into the following categories:

• Speech recognition, which deals with analysis of the linguistic content of a speech signal.

• Speaker recognition, where the aim is to recognize the identity of the speaker.

• Speech coding: a specialized form of data compression that is important in telecommunications.

• Voice analysis for medical purposes, such as analysis of vocal loading and dysfunction of the vocal cords.

• Speech synthesis: the artificial synthesis of speech, which usually means computer-generated speech.

• Speech enhancement: improving the perceptual quality of a speech signal by removing the destructive effects of noise (e.g. audio noise reduction), limited-capacity recording equipment, impairments, etc.
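Speech coding can be illustrated with μ-law companding, the logarithmic scheme used in North American and Japanese digital telephony: quiet samples get finer quantization steps than loud ones, so 8 bits per sample remain acceptable for speech. This sketch uses the continuous μ-law formula with μ = 255; the actual G.711 codec uses a segmented, table-based approximation of this curve, so the codes below are not bit-exact G.711 output.

```python
import math

MU = 255.0  # mu value used by mu-law telephony

def mu_law_compress(x, mu=MU):
    """Map a sample in [-1, 1] to [-1, 1], stretching the quiet range."""
    return math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)

def mu_law_expand(y, mu=MU):
    """Inverse of mu_law_compress."""
    return math.copysign(((1 + mu) ** abs(y) - 1) / mu, y)

def encode_8bit(x):
    """Compress a sample, then quantise it to one of 256 integer codes (0..255)."""
    y = mu_law_compress(x)
    return int(round((y + 1) / 2 * 255))

def decode_8bit(code):
    """Map an 8-bit code back to an approximate sample value."""
    y = code / 255 * 2 - 1
    return mu_law_expand(y)
```

A quiet sample such as 0.01 survives the 8-bit round trip with an error of a few ten-thousandths, well below the roughly 0.004 worst-case error of plain 8-bit linear quantisation over the same range.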

Design:-

Voice analysis is the study of speech sounds for purposes other than extracting their linguistic content, which is the goal of speech recognition. Such studies mostly involve medical analysis of the voice (phoniatrics), but they also include speaker identification. More controversially, some believe that the truthfulness or emotional state of speakers can be determined using Voice Stress Analysis or Layered Voice Analysis.

Vocal loading is the stress inflicted on the speech organs when speaking for long periods.

The vocal folds, commonly known as the vocal cords, are composed of twin infoldings of mucous membrane stretched horizontally across the larynx. They vibrate, modulating the flow of air expelled from the lungs during phonation.

Speech signal processing refers to the acquisition, manipulation, storage, transfer and output of vocal utterances by a computer. The main applications are the recognition, synthesis and compression of human speech:

• Speech recognition (also called voice recognition) focuses on capturing the human voice as a digital sound wave and converting it into a computer-readable format.

• Speech synthesis is the reverse process of speech recognition. Advances in this area improve the computer’s usability for the visually impaired.

• Speech compression is important in the telecommunications area for increasing the amount of information which can be transferred, stored, or heard, for a given set of time and space constraints.

Figure below: Diagrammatic representation of a speech engine

Working:-

Early speech recognition systems tried to apply a set of grammatical and syntactical rules to speech. If the words spoken fit into a certain set of rules, the program could determine what the words were. However, human language has numerous exceptions to its own rules, even when it’s spoken consistently. Accents, dialects and mannerisms can vastly change the way certain words or phrases are spoken. Imagine someone from Boston saying the word “barn.” He wouldn’t pronounce the “r” at all, and the word comes out rhyming with “John.” Or consider the sentence, “I’m going to see the ocean.” Most people don’t enunciate their words very carefully. The result might come out as “I’m goin’ da see Tha Ocean.” They run several of the words together with no noticeable break, such as “I’m goin'” and “the ocean.” Rules-based systems were unsuccessful because they couldn’t handle these variations. This also explains why earlier systems could not handle continuous speech — you had to speak each word separately, with a brief pause in between them.

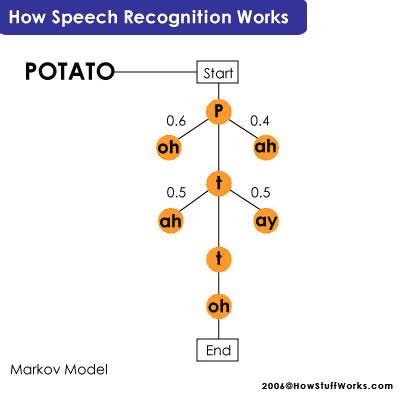

Today’s speech recognition systems use powerful and complicated statistical modeling systems. These systems use probability and mathematical functions to determine the most likely outcome. According to John Garofolo, Speech Group Manager at the Information Technology Laboratory of the National Institute of Standards and Technology, the two models that dominate the field today are the Hidden Markov Model and neural networks. These methods involve complex mathematical functions, but essentially, they take the information known to the system to figure out the information hidden from it.

The Hidden Markov Model is the most common, so we’ll take a closer look at that process. In this model, each phoneme is like a link in a chain, and the completed chain is a word. However, the chain branches off in different directions as the program attempts to match the digital sound with the phoneme that’s most likely to come next. During this process, the program assigns a probability score to each phoneme, based on its built-in dictionary and user training.
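The branching search described above is usually carried out with the Viterbi algorithm, which keeps only the best-scoring path into each state at every step. The model below is a hypothetical toy: three states (silence, the vowel “iy”, the consonant “ch”, as at the end of “beach”) and three coarse acoustic labels stand in for real phoneme models and acoustic features, and all probabilities are made up.

```python
import math

def _log(p):
    """Log-probability that maps 0 to -inf instead of raising an error."""
    return math.log(p) if p > 0 else float("-inf")

def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (log probability, state path) of the most likely hidden path."""
    # best[s] holds the score and path of the best partial path ending in s.
    best = {s: (_log(start_p[s]) + _log(emit_p[s][observations[0]]), [s])
            for s in states}
    for obs in observations[1:]:
        new_best = {}
        for s in states:
            # Keep only the best-scoring predecessor for each state.
            score, path = max(
                (best[prev][0] + _log(trans_p[prev][s]), best[prev][1])
                for prev in states)
            new_best[s] = (score + _log(emit_p[s][obs]), path + [s])
        best = new_best
    return max(best.values())

# Hypothetical toy model: silence, then "iy", then "ch".
STATES = ["sil", "iy", "ch"]
START = {"sil": 1.0, "iy": 0.0, "ch": 0.0}
TRANS = {  # left-to-right: a state may repeat or move on, never go back
    "sil": {"sil": 0.6, "iy": 0.4, "ch": 0.0},
    "iy": {"sil": 0.0, "iy": 0.6, "ch": 0.4},
    "ch": {"sil": 0.0, "iy": 0.0, "ch": 1.0},
}
EMIT = {  # P(coarse acoustic label | state), made-up numbers
    "sil": {"quiet": 0.8, "voiced": 0.1, "hiss": 0.1},
    "iy": {"quiet": 0.1, "voiced": 0.8, "hiss": 0.1},
    "ch": {"quiet": 0.1, "voiced": 0.1, "hiss": 0.8},
}
```

Decoding the label sequence quiet, voiced, voiced, hiss yields the path sil, iy, iy, ch. Working in log probabilities avoids numerical underflow on long utterances.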

This process is even more complicated for phrases and sentences — the system has to figure out where each word stops and starts. The classic example is the phrase “recognize speech,” which sounds a lot like “wreck a nice beach” when you say it very quickly. The program has to analyze the phonemes using the phrase that came before it in order to get it right. Here’s a breakdown of the two phrases:

r eh k ao g n ay z s p iy ch

“recognize speech”

r eh k ay n ay s b iy ch

“wreck a nice beach”
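Finding where words start and stop can be sketched as dynamic programming over a phoneme string: try every possible next word from a pronunciation lexicon and keep the highest-scoring split. The lexicon below reuses the phoneme notation above, but its entries and word probabilities are hypothetical, and real decoders combine this search with acoustic scores and richer language models.

```python
import math
from functools import lru_cache

# Hypothetical pronunciation lexicon in the phoneme notation used above.
# Each entry maps a phoneme sequence to (word, made-up probability) pairs.
LEXICON = {
    ("r", "eh", "k", "ao", "g", "n", "ay", "z"): [("recognize", 0.001)],
    ("s", "p", "iy", "ch"): [("speech", 0.001)],
    ("r", "eh", "k"): [("wreck", 0.0005)],
    ("ay",): [("a", 0.02), ("eye", 0.0005)],
    ("n", "ay", "s"): [("nice", 0.001)],
    ("b", "iy", "ch"): [("beach", 0.0005)],
}
MAX_LEN = max(len(pron) for pron in LEXICON)

def best_segmentation(phone_string):
    """Return (log probability, words) for the best split, or None if none exists."""
    phones = tuple(phone_string.split())

    @lru_cache(maxsize=None)
    def best_from(i):
        # Best segmentation of phones[i:], trying every possible next word.
        if i == len(phones):
            return (0.0, ())
        best = None
        for j in range(i + 1, min(i + MAX_LEN, len(phones)) + 1):
            for word, p in LEXICON.get(phones[i:j], []):
                rest = best_from(j)
                if rest is None:
                    continue
                candidate = (math.log(p) + rest[0], (word,) + rest[1])
                if best is None or candidate[0] > best[0]:
                    best = candidate
        return best

    result = best_from(0)
    return None if result is None else (result[0], list(result[1]))
```

Here “r eh k ay n ay s b iy ch” splits as wreck / a / nice / beach (the more probable “a” beats its homophone “eye”), while “r eh k ao g n ay z s p iy ch” splits as recognize / speech, because the shorter “wreck” leaves phonemes no word can cover.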

Why is this so complicated? If a program has a vocabulary of 60,000 words (common in today’s programs), a sequence of three words could be any of 216 trillion possibilities. Obviously, even the most powerful computer can’t search through all of them without some help.
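The figure of 216 trillion follows directly from the vocabulary size, since each of the three word positions can independently be any of the 60,000 words. The second line is purely illustrative: it assumes, hypothetically, that context lets the system keep only about 100 plausible candidates for each word after the first.

$$60\,000^3 = (6\times10^4)^3 = 216\times10^{12} = 2.16\times10^{14}$$

$$60\,000 \times 100 \times 100 = 6\times10^{8}$$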

That help comes in the form of program training. According to John Garofolo:

These statistical systems need lots of exemplary training data to reach their optimal performance — sometimes on the order of thousands of hours of human-transcribed speech and hundreds of megabytes of text. These training data are used to create acoustic models of words, word lists, and […] multi-word probability networks. There is some art into how one selects, compiles and prepares this training data for “digestion” by the system and how the system models are “tuned” to a particular application. These details can make the difference between a well-performing system and a poorly-performing system — even when using the same basic algorithm.
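The multi-word probability networks mentioned in the quotation can be illustrated by the simplest such model, a bigram language model estimated from transcribed text. This sketch assumes add-one (Laplace) smoothing so that unseen word pairs keep a small nonzero probability; the function name `train_bigram_model` is hypothetical, and production systems use more refined smoothing and far more data.

```python
from collections import Counter

def train_bigram_model(sentences):
    """Estimate P(word | previous word) with add-one (Laplace) smoothing.

    `sentences` is a list of transcribed sentences; <s> and </s> mark
    sentence boundaries. Returns a function prob(prev, word).
    """
    contexts, pairs = Counter(), Counter()
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        contexts.update(words[:-1])           # each word used as a context
        pairs.update(zip(words, words[1:]))   # adjacent word pairs
    vocab = {w for s in sentences for w in s.lower().split()} | {"</s>"}
    vocab_size = len(vocab)

    def prob(prev, word):
        return (pairs[(prev, word)] + 1) / (contexts[prev] + vocab_size)
    return prob
```

Trained on the two sentences “recognize speech” and “recognize the speaker”, the model gives P(speech | recognize) = 2/7, and the probabilities of all possible next words after “recognize” sum to 1.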

While the software developers who set up the system’s initial vocabulary perform much of this training, the end user must also spend some time training it. In a business setting, the primary users of the program must spend some time (sometimes as little as 10 minutes) speaking into the system to train it on their particular speech patterns. They must also train the system to recognize terms and acronyms particular to the company. Special editions of speech recognition programs for medical or legal offices have terms commonly used in those fields already trained into them.

Applications:-

- Educational Institutions

- Telebanking

- Password Security

- Guiding the visually challenged

- Speaker Recognition

- Health care

- High-performance fighter aircraft

- Battle Management

- Training air traffic controllers

- Hands-free computing

Devices and technologies used for Speech Recognition:-

- Microphone (mic)

- Sound cards

- Data compression

- Telecommunication (Skype, Yahoo Messenger)

Speech Recognition: Weaknesses and Flaws:-

No speech recognition system is perfect; several factors can reduce its accuracy. Some of these factors are issues that continue to improve as the technology improves. Others can be lessened, if not completely corrected, by the user.

- Low signal-to-noise ratio

- Overlapping speech

- Intensive use of computer power

- Homophones (e.g. ‘their’ and ‘there’)
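Signal-to-noise ratio, the first factor listed, is usually expressed in decibels as 10·log10(P_signal / P_noise), where P is average power. The helper below is a minimal sketch that assumes separate recordings of the speech and of the noise are available; in practice they are mixed together and the noise level must be estimated.

```python
import math

def snr_db(speech, noise):
    """Signal-to-noise ratio in decibels from lists of samples."""
    p_signal = sum(x * x for x in speech) / len(speech)  # average speech power
    p_noise = sum(x * x for x in noise) / len(noise)     # average noise power
    return 10 * math.log10(p_signal / p_noise)
```

Noise with one tenth of the speech amplitude has one hundredth of its power, giving an SNR of 20 dB; each further halving of the SNR ratio costs about 3 dB.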

Conclusion (Future of Speech Recognition):-

The first developments in speech recognition predate the invention of the modern computer by more than 50 years. Alexander Graham Bell was inspired to experiment in transmitting speech by his wife, who was deaf. He initially hoped to create a device that would transform audible words into a visible picture that a deaf person could interpret. He did produce spectrographic images of sounds, but his wife was unable to decipher them. That line of research eventually led to his invention of the telephone.

For several decades, scientists developed experimental methods of computerized speech recognition, but the computing power available at the time limited them. Only in the 1990s did computers powerful enough to handle speech recognition become available to the average consumer. Current research could lead to technologies that today are more familiar from episodes of “Star Trek.” The Defense Advanced Research Projects Agency (DARPA) has three teams of researchers working on Global Autonomous Language Exploitation (GALE), a program that will take in streams of information from foreign news broadcasts and newspapers and translate them. The program aims to create software that can instantly translate two languages with at least 90 percent accuracy. “DARPA is also funding an R&D effort called TRANSTAC to enable our soldiers to communicate more effectively with civilian populations in non-English-speaking countries,” said Garofolo, adding that the technology will undoubtedly spin off into civilian applications, including a universal translator.

A universal translator is still far in the future, however; it’s very difficult to build a system that combines automatic translation with voice activation technology. According to a recent CNN article, the GALE project is “‘DARPA hard’ [meaning] difficult even by the extreme standards” of DARPA. Why? One problem is making a system that can flawlessly handle roadblocks like slang, dialects, accents and background noise. The different grammatical structures used by languages can also pose a problem. For example, Arabic sometimes uses single words to convey ideas that are entire sentences in English.

At some point in the future, speech recognition may become speech understanding. The statistical models that allow computers to decide what a person just said may someday allow them to grasp the meaning behind the words. Although it is a huge leap in terms of computational power and software sophistication, some researchers argue that speech recognition development offers the most direct line from the computers of today to true artificial intelligence. We can talk to our computers today. In 25 years, they may very well talk back.

Summary:-

Speech recognition will revolutionize the entire field of human-computer interaction. People will be free to move about near a computer rather than being confined to a chair and desk. Input speeds will increase because users can speak rather than type. Eventually, the computer could act on our behalf, for example by searching for information on the Internet while we sleep or go to work. After this step, true artificial intelligence will not be too far behind.
