Technical Paper Title: BLUE EYES

Authors: Maram B M RAJESH KUMAR, 3rd Year BTech, CSE

College: SRI INDU COLLEGE OF ENGINEERING AND TECHNOLOGY

ABSTRACT

Is it possible to create a computer that can interact with us the way we interact with each other? For example, imagine that one fine morning you walk into your computer room and switch on your computer, and it tells you, “Hey friend, good morning, you seem to be in a bad mood today.” It then opens your mailbox, shows you some of your mail, and tries to cheer you up. It sounds like fiction, but it will be the life led by BLUE EYES in the very near future.

The basic idea behind this technology is to give the computer human power. We all have some perceptual abilities; that is, we can understand each other’s feelings. For example, we can understand a person’s emotional state by analyzing his facial expression. Adding these human perceptual abilities to computers would enable computers to work together with human beings as intimate partners. The BLUE EYES technology aims at creating computational machines that have perceptual and sensory abilities like those of human beings.

INTRODUCTION

Imagine yourself in a world where humans interact with computers. You are sitting in front of your personal computer, which can listen, talk, or even scream aloud. It has the ability to gather information about you and interact with you through special techniques like facial recognition, speech recognition, etc. It can even understand your emotions at the touch of the mouse. It verifies your identity, feels your presence, and starts interacting with you. You ask the computer to dial your friend at his office. It realizes the urgency of the situation through the mouse, dials your friend at his office, and establishes a connection.

Human cognition depends primarily on the ability to perceive, interpret, and integrate audio-visual and sensory information. Adding extraordinary perceptual abilities to computers would enable computers to work together with human beings as intimate partners. Researchers are attempting to add more capabilities to computers that will allow them to interact like humans, recognize human presence, talk, listen, or even guess their feelings.

The BLUE EYES technology aims at creating computational machines that have perceptual and sensory abilities like those of human beings. It uses non-obtrusive sensing methods, employing modern video cameras and microphones, to identify the user’s actions through the use of imparted sensory abilities. The machine can understand what a user wants, where he is looking, and even realize his physical or emotional state.

In the name BLUE EYES, “Blue” stands for Bluetooth (which enables wireless communication) and “Eyes” is used because eye movement enables us to obtain a lot of interesting and important information.

SYSTEM OVERVIEW

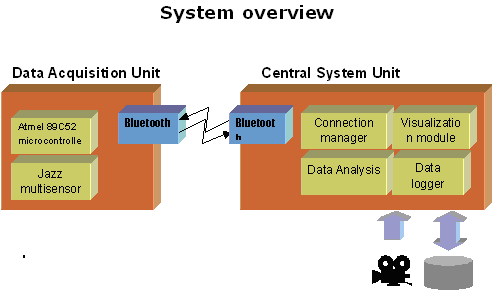

The BLUE EYES system mainly consists of a mobile measuring device called the Data Acquisition Unit (DAU) and a central analytical system called the Central System Unit (CSU).

DATA ACQUISITION UNIT

The tasks of the Data Acquisition Unit are to maintain Bluetooth connections, to get information from the sensors and send it over the wireless connection, to deliver the alarm messages sent from the Central System Unit to the operator, and to handle personalized ID cards.

CENTRAL SYSTEM UNIT

The CSU maintains the other side of the Bluetooth connection, buffers incoming sensor data, performs online data analysis, records conclusions for further exploration, and provides a visualization interface.

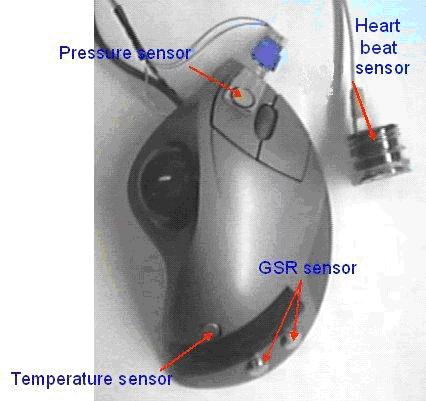

EMOTION MOUSE

One goal of human-computer interaction (HCI) is to make an adaptive, smart computer system. This type of project could possibly include gesture recognition, facial recognition, eye tracking, speech recognition, etc. Another non-invasive way to obtain information about a person is through touch. People use their computers to obtain, store and manipulate data. In order to start creating smart computers, the computer must start gaining information about the user. Our proposed method for gaining user information through touch is via a computer input device, the mouse. From the physiological data obtained from the user, an emotional state may be determined, which would then be related to the task the user is currently doing on the computer. Over a period of time, a user model will be built in order to gain a sense of the user’s personality. The scope of the project is to have the computer adapt to the user in order to create a better working environment where the user is more productive.

E-MOTION MOUSE

EMOTION AND COMPUTING

Emotion detection is an important step toward an adaptive computer system. The goal of an adaptive, smart computer system has been driving our efforts to detect a person’s emotional state. An important reason for incorporating emotion into computing is to improve the productivity of the computer user.

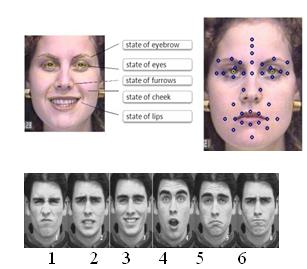

Six participants were trained to exhibit the facial expressions of the six basic emotions. While each participant exhibited these expressions, the physiological changes associated with affect were assessed.

GEOMETRIC FACIAL DATA EXTRACTION

Determining which emotional state the user belongs to

The measures taken were galvanic skin response (GSR), heart rate, skin temperature and general somatic activity (GSA). These data were then subjected to two analyses. For the first analysis, a multidimensional scaling (MDS) procedure was used to determine the dimensionality of the data. This analysis suggested that the physiological similarities and dissimilarities of the six emotional states fit within a four-dimensional model. For the second analysis, a discriminant function analysis was used to determine the mathematical functions that would distinguish the six emotional states.
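The idea of mapping a vector of physiological measures to an emotional state can be illustrated with a deliberately simplified stand-in for discriminant function analysis: a nearest-centroid rule. This is not the analysis used in the study; it coincides with linear discriminant analysis only under the assumption of equal priors and identical spherical covariance. The function names and all numbers below are invented for illustration and are not measured data.

```python
import math

def centroid(rows):
    """Mean vector of a list of equal-length feature vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def fit_centroids(samples):
    """samples maps an emotion label to a list of feature vectors."""
    return {label: centroid(rows) for label, rows in samples.items()}

def classify(centroids, x):
    """Return the label whose centroid is closest (Euclidean) to x."""
    return min(centroids, key=lambda label: math.dist(centroids[label], x))

# Invented, unit-free values in the order (GSR, heart rate, skin temperature, GSA).
toy_samples = {
    "anger": [[0.9, 0.8, 0.2, 0.7], [0.8, 0.9, 0.3, 0.6]],
    "sadness": [[0.2, 0.3, 0.6, 0.1], [0.3, 0.2, 0.7, 0.2]],
}
toy_centroids = fit_centroids(toy_samples)
```

A real system would need normalized measurements from many participants and a properly validated classifier; the sketch only shows the shape of the computation.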

MANUAL AND GAZE INPUT CASCADED (MAGIC) POINTING

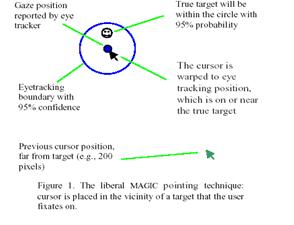

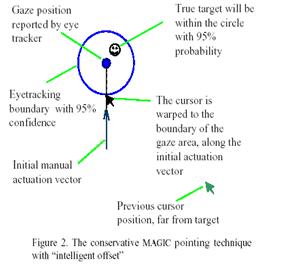

We propose an alternative approach, dubbed MAGIC (Manual And Gaze Input Cascaded) pointing. With such an approach, pointing appears to the user to be a manual task, used for fine manipulation and selection. However, a large portion of the cursor movement is eliminated by warping the cursor to the eye gaze area, which encompasses the target. Two specific MAGIC pointing techniques, one conservative and one liberal, were designed, analyzed, and implemented with an eye tracker we developed. They were then tested in a pilot study.

We have designed two MAGIC pointing techniques, one liberal and the other conservative in terms of target identification and cursor placement. The liberal approach is to warp the cursor to every new object the user looks at (See Figure 1).

The user can then take control of the cursor by hand near (or on) the target, or ignore it and search for the next target. Operationally, a new object is defined by sufficient distance (e.g., 120 pixels) from the current cursor position, unless the cursor is in a controlled motion by hand. Since there is a 120-pixel threshold, the cursor will not be warped when the user does continuous manipulation such as drawing. Note that this MAGIC pointing technique is different from traditional eye gaze control, where the user uses his eye to point at targets either without a cursor or with a cursor that constantly follows the jittery eye gaze motion.
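The liberal warping rule described above can be sketched as a small function. This is a minimal illustration under stated assumptions: positions are (x, y) pixel tuples, the eye tracker reports a single gaze point, and the name `liberal_warp` and the `hand_moving` flag are hypothetical. The 120-pixel value is the example threshold quoted in the text.

```python
import math

WARP_THRESHOLD_PX = 120  # example threshold quoted in the text

def liberal_warp(cursor, gaze, hand_moving, threshold=WARP_THRESHOLD_PX):
    """Return the cursor position after applying the liberal warping rule.

    cursor, gaze: (x, y) pixel tuples.
    hand_moving: True while the cursor is in controlled motion by hand.
    """
    if hand_moving:
        # Never warp during manual manipulation such as drawing.
        return cursor
    if math.dist(cursor, gaze) >= threshold:
        # The gaze has moved to a new object: jump the cursor there.
        return gaze
    # Gaze is still near the cursor: treat it as the same object.
    return cursor
```

Because the check is skipped while the hand is moving, continuous manipulation is left undisturbed, which matches the behavior the text describes.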

The more conservative MAGIC pointing technique we have explored does not warp a cursor to a target until the manual input device has been actuated. Once the manual input device has been actuated, the cursor is warped to the gaze area reported by the eye tracker. This area should be on or in the vicinity of the target. The user would then steer the cursor manually towards the target to complete the target acquisition.

Figure 1. The cursor is warped to the eye tracking position, which is on or near the true target. The previous cursor position is far from the target (e.g., 200 pixels).
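The conservative technique can be sketched as a small state holder that warps only on the first manual actuation. The class and method names are hypothetical, and two details are assumptions rather than statements from the text: the manual displacement is applied after the warp, and an explicit idle event marks the end of a manual movement.

```python
class ConservativePointer:
    """Warp the cursor to the gaze area only when the hand starts moving."""

    def __init__(self, cursor):
        self.cursor = cursor       # current (x, y) cursor position
        self.hand_active = False   # True while a manual movement is ongoing

    def on_manual_input(self, dx, dy, gaze):
        """Handle one manual displacement (dx, dy); gaze is an (x, y) tuple."""
        if not self.hand_active:
            # First actuation: warp to the gaze area reported by the tracker.
            self.cursor = gaze
            self.hand_active = True
        # The user then steers manually from the warped position.
        x, y = self.cursor
        self.cursor = (x + dx, y + dy)
        return self.cursor

    def on_manual_idle(self):
        """Mark the manual movement as finished so the next one warps again."""
        self.hand_active = False
```

For example, with the cursor at (0, 0) and the gaze at (500, 300), a first displacement of (1, 0) places the cursor at (501, 300); later displacements in the same movement ignore the gaze.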

follow “virtual inertia”: move from the previous cursor position and make the motion that is most convenient and least effortful for the user with a given input device.

Both the liberal and the conservative MAGIC pointing techniques offer the following potential advantages:

1. Reduction of manual stress and fatigue, since the cross screen long-distance cursor movement is eliminated from manual control.

2. Practical accuracy level. In comparison to traditional pure gaze pointing, whose accuracy is fundamentally limited by the nature of eye movement, the MAGIC pointing techniques let the hand complete the pointing task, so they can be as accurate as any other manual input technique.

3. A more natural mental model for the user. The user does not have to be aware of the role of the eye gaze. To the user, pointing continues to be a manual task, with a cursor conveniently appearing where it needs to be.

4. Speed. Since the need for large magnitude pointing operations is less than with pure manual cursor control, it is possible that MAGIC pointing will be faster than pure manual pointing.

IMPLEMENTATION

We undertook two engineering efforts to implement the MAGIC pointing techniques. One was to design and implement an eye tracking system, and the other was to implement the MAGIC pointing techniques at the operating system level, so that the techniques can work with all software applications beyond “demonstration” software.

THE IBM ALMADEN EYE TRACKER

Since the goal of this work is to explore MAGIC pointing as a user interface technique,

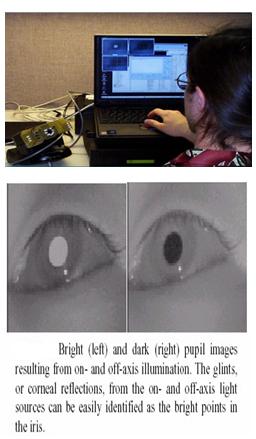

When the light source is placed on-axis with the camera optical axis, the camera is able to detect the light reflected from the interior of the eye, and the image of the pupil appears bright (see Figure 3). This effect is often seen as the red-eye in flash photographs when the flash is close to the camera lens.

Figure 3. Bright (left) and dark (right) pupil images resulting from on- and off-axis illumination. The glints, or corneal reflections, from the on- and off-axis light sources can be easily identified as the bright points in the iris.

The Almaden system uses two near infrared (IR) time multiplexed light sources, composed of two sets of IR LEDs, which are synchronized with the camera frame rate. One light source is placed very close to the camera’s optical axis and is synchronized with the even frames. Odd frames are synchronized with the second light source, positioned off axis. The two light sources are calibrated to provide approximately equivalent whole-scene illumination. Pupil detection is realized by subtracting the dark pupil image from the bright pupil image. After thresholding the difference, the largest connected component is identified as the pupil. This technique significantly increases the robustness and reliability of the eye tracking system.
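The pupil detection steps (subtract, threshold, keep the largest connected component) can be sketched in plain Python on small grayscale images stored as nested lists. This is an illustrative sketch, not the Almaden implementation: the function name `detect_pupil`, the use of 4-connectivity, and the caller-supplied threshold are all assumptions.

```python
from collections import deque

def detect_pupil(bright, dark, threshold):
    """Return the set of (row, col) pixels forming the largest connected
    region of the thresholded difference image (bright - dark)."""
    rows, cols = len(bright), len(bright[0])
    # Step 1 and 2: subtract the dark pupil image and threshold the result.
    mask = [[bright[r][c] - dark[r][c] > threshold for c in range(cols)]
            for r in range(rows)]
    seen = set()
    best = set()
    # Step 3: flood-fill each unvisited foreground pixel, keep the biggest blob.
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or (r, c) in seen:
                continue
            blob = set()
            queue = deque([(r, c)])
            seen.add((r, c))
            while queue:
                y, x = queue.popleft()
                blob.add((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and (ny, nx) not in seen):
                        seen.add((ny, nx))
                        queue.append((ny, nx))
            if len(blob) > len(best):
                best = blob
    return best
```

Small isolated bright spots, such as a stray glint that survives the subtraction, are discarded because only the largest region is kept.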

ARTIFICIAL INTELLIGENCE SPEECH RECOGNITION

It is important to consider the environment in which the speech recognition system has to work. The grammar used by the speaker and accepted by the system, the noise level, the noise type, the position of the microphone, and the speed and manner of the user’s speech are some factors that may affect the quality of speech recognition. When you dial the telephone number of a big company, you are likely to hear the sonorous voice of a cultured lady who responds to your call with great courtesy, saying, “Welcome to company X. Please give me the extension number you want.” You pronounce the extension number, your name, and the name of the person you want to contact. If the called person accepts the call, the connection is made quickly. This is artificial intelligence at work: an automatic call handling system is used without employing any telephone operator.

THE TECHNOLOGY

Artificial intelligence (AI) involves two basic ideas. First, it involves studying the thought processes of human beings. Second, it deals with representing those processes via machines (like computers, robots, etc.). AI is the behavior of a machine which, if performed by a human being, would be called intelligent. It makes machines smarter and more useful, and is less expensive than natural intelligence. Natural language processing (NLP) refers to artificial intelligence methods of communicating with a computer in a natural language like English. The main objective of an NLP program is to understand input and initiate action. The input words are scanned and matched against internally stored known words. Identification of a key word causes some action to be taken. In this way, one can communicate with the computer in one’s own language. No special commands or computer language are required. There is no need to enter programs in a special language for creating software.
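The keyword-matching scheme described above, where input words are scanned against stored known words and a match triggers an action, can be sketched as follows. The keyword table and action names are invented for illustration; a practical NLP program would be far more elaborate.

```python
# Hypothetical table of known words and the action each one triggers.
KNOWN_KEYWORDS = {
    "dial": "start_call",
    "open": "open_item",
    "cancel": "cancel_request",
}

def interpret(sentence, keywords=KNOWN_KEYWORDS):
    """Scan the words of a sentence and return the action for the first
    known keyword, or None if no keyword is recognized."""
    for word in sentence.lower().split():
        token = word.strip(".,!?;:")  # drop surrounding punctuation
        if token in keywords:
            return keywords[token]
    return None
```

With this table, the input "Please dial my friend." maps to the action name "start_call", while a sentence containing no known word returns None.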

SPEECH RECOGNITION

The user speaks to the computer through a microphone, whose output is fed to a bank of audio filters; a simple system may contain a minimum of three filters. The more filters used, the higher the probability of accurate recognition. Presently, switched capacitor digital filters are used because these can be custom-built in integrated circuit form. These are smaller and cheaper than active filters using operational amplifiers. The filter output is then fed to the analog-to-digital converter (ADC) to translate the analogue signal into a digital word. The ADC samples the filter outputs many times a second. Each sample represents a different amplitude of the signal. Evenly spaced vertical lines represent the amplitude of the audio filter output at the instant of sampling. Each value is then converted to a binary number proportional to the amplitude of the sample. A central processing unit (CPU) controls the input circuits that are fed by the ADCs. A large RAM (random access memory) stores all the digital values in a buffer area. This digital information, representing the spoken word, is now accessed by the CPU for further processing. Normal speech has a frequency range of 200 Hz to 7 kHz. Recognizing a telephone call is more difficult, as it has a bandwidth limitation of 300 Hz to 3.3 kHz. As explained earlier, the spoken words are processed by the filters and ADCs. It is important to note that words and templates must be aligned correctly in time before the similarity score is computed. This process, termed dynamic time warping, recognizes that different speakers pronounce the same words at different speeds and elongate different parts of the same word. This is important for speaker-independent recognizers.
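Dynamic time warping can be illustrated with the classic dynamic-programming recurrence on two one-dimensional sequences. This is a textbook sketch, not the recognizer described in the paper: real systems compare sequences of feature vectors rather than single numbers, and the function name and the absolute-difference cost are assumptions.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two numeric sequences,
    using absolute difference as the local cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] is the best alignment cost of a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: stretching a, stretching b, or a match.
            cost[i][j] = d + min(cost[i - 1][j],
                                 cost[i][j - 1],
                                 cost[i - 1][j - 1])
    return cost[n][m]
```

A word spoken slowly, modeled here as a sequence with a repeated element, still matches its template with zero distance: comparing [1, 2, 3] with [1, 2, 2, 3] gives 0.0, whereas a sample-by-sample comparison would not even be defined for sequences of different length.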

APPLICATIONS

One of the main benefits of a speech recognition system is that it lets the user do other work simultaneously. The user can concentrate on observation and manual operations, and still control the machinery by voice input commands. Another major application of speech processing is in military operations. Voice control of weapons is an example. With reliable speech recognition equipment, pilots can give commands and information to the computers by simply speaking into their microphones; they don’t have to use their hands for this purpose. Another good example is a radiologist scanning hundreds of X-rays, ultrasonograms and CT scans while simultaneously dictating conclusions to a speech recognition system connected to word processors. The radiologist can focus his attention on the images rather than on writing the text. Voice recognition could also be used on computers for making airline and hotel reservations. A user simply needs to state his needs to make a reservation, cancel a reservation, or make enquiries about a schedule.

THE SIMPLE USER INTEREST TRACKER (SUITOR)

Computers would be much more powerful if they had the perceptual and sensory abilities of living beings. What needs to be developed is an intimate relationship between the computer and humans, and the Simple User Interest Tracker (SUITOR) is a revolutionary approach in this direction. By observing the web page a netizen is browsing, the SUITOR can help by fetching more information to his desktop. By simply noticing where the user’s eyes focus on the computer screen, the SUITOR can be more precise in determining his topic of interest. It can even deliver relevant information to a handheld device.

TYPES OF USERS

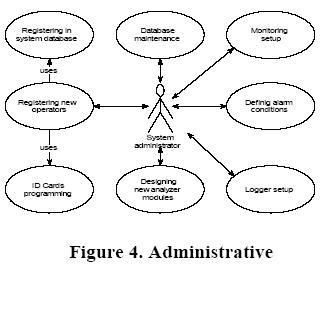

BlueEyes has three groups of users: operators, supervisors and system administrators. An operator is a person whose physiological parameters are supervised. The operator wears the DAU. The only functions offered to that user are authorization in the system and receiving alarm alerts. Such limited functionality ensures that the device does not disturb the work of the operator (Fig. 2).

Mobile device user

Authorization – the function is used when the operator’s duty starts. After the operator inserts his personal ID card into the mobile device and enters the proper PIN code, the device starts listening for incoming Bluetooth connections. Once the connection has been established and the authorization process has succeeded (the PIN code is correct), the central system starts monitoring the operator’s physiological parameters. The authorization process must be repeated after the ID card is reinserted. It is not, however, required when the Bluetooth connection is reestablished.
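The authorization rules above can be summarized as a small state model. This is a simplified sketch with hypothetical names: it checks the PIN locally at card insertion, whereas the text suggests the check completes once the Bluetooth connection to the central system exists, and it ignores the Bluetooth protocol itself.

```python
class MobileDevice:
    """Toy model of the DAU authorization state."""

    def __init__(self, expected_pin):
        self._expected_pin = expected_pin  # assumption: PIN known to the model
        self.card_inserted = False
        self.authorized = False
        self.connected = False

    def insert_card(self, pin):
        """Inserting (or reinserting) the card always restarts authorization."""
        self.card_inserted = True
        self.authorized = (pin == self._expected_pin)
        return self.authorized

    def remove_card(self):
        """Removing the card discards the authorization."""
        self.card_inserted = False
        self.authorized = False

    def bluetooth_connected(self):
        self.connected = True

    def bluetooth_lost(self):
        # Losing the link does not discard the authorization.
        self.connected = False

    @property
    def monitoring(self):
        """Monitoring runs only with a card, a valid PIN and a live link."""
        return self.card_inserted and self.authorized and self.connected
```

In this model, reconnecting after a dropped link resumes monitoring without a new PIN entry, while removing and reinserting the card requires the PIN again.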

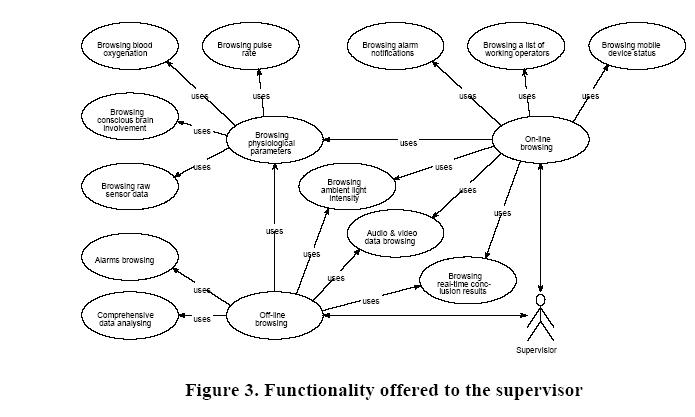

A supervisor is a person responsible for analyzing the operators’ condition and performance. The supervisor receives tools for inspecting present values of the parameters (on-line browsing) as well as browsing the results of long-term analysis (off-line browsing).

During the on-line browsing it is possible to watch a list of currently working operators and the status of their mobile devices. Selecting one of the operators enables the supervisor to check the operator’s current physiological condition (e.g. a pie chart showing active brain involvement) and a history of alarms regarding the operator. All new incoming alerts are displayed immediately so that the supervisor is able to react fast. However, the presence of the human supervisor is not necessary since the system is equipped with reasoning algorithms and can trigger user-defined actions (e.g. to inform the operator’s co-workers).

Logger setup provides tools for selecting the parameters to be recorded. For audio data, the sampling frequency can be chosen. As regards the video signal, a delay between storing consecutive frames can be set (e.g. one picture every two seconds).

Database maintenance – here the administrator can remove old or “uninteresting” data from the database. The “uninteresting” data is suggested by the built-in reasoning system.

ADVANTAGES

The following are the advantages of the Blue Eyes System.

1. Reduce Fatigue

2. Practical Accuracy

3. Speed

APPLICATIONS

The following are the applications of the Blue Eyes System.

1. At power plant control rooms.

2. At captain bridges.

3. At flight control centers.

4. For professional drivers.

CONCLUSION

The Blue Eyes system was developed because of the need for a real-time monitoring system for a human operator. The approach is innovative since it helps supervise the operator, not the process, as is the case in presently available solutions. We hope the system in its commercial release will help avoid potential threats resulting from human errors, such as weariness, oversight, tiredness or temporary indisposition. The prototype developed is a good estimation of the possibilities of the final product. The use of a miniature CMOS camera integrated into the eye movement sensor will enable the system to calculate the point of gaze and observe what the operator is actually looking at. Introducing a voice recognition algorithm will facilitate the communication between the operator and the central system and simplify the authorization process.

Although this report considers only operators working in control rooms, our solution may well be applied to everyday life situations. Assuming the operator is a driver and the supervised process is car driving, it is possible to build a simpler embedded on-line system, which will only monitor conscious brain involvement and warn when necessary. As in this case the logging module is redundant, and Bluetooth technology is becoming more and more popular, the commercial implementation of such a system would be relatively inexpensive. These new possibilities can cover areas such as industry, transportation, military command centers or operating theaters. Researchers are attempting to add more capabilities to computers that will allow them to interact like humans, recognize human presence, talk, listen, or even guess their feelings.

The final thing is to explain the name of our system. Blue Eyes emphasizes the foundations of the project – Bluetooth technology and the movements of the eyes. Bluetooth provides reliable wireless communication whereas the eye movements enable us to obtain a lot of interesting and important information.
